UAS Technology in Focus

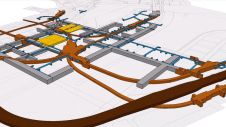

A UAS intended for mapping, inspection or reconnaissance combines several elements: an aircraft, a ground control station (GCS), on-board navigation sensors, a radio link for manual control of the aircraft, one or more geodata collection sensors, and a wireless link that transmits the data recorded by the geodata collection and navigation sensors to the GCS and a PC, laptop or tablet (Figure 1). The aircraft, whether fixed-wing or rotary-wing, is usually propelled by a battery-powered electric motor. However, a battery charge typically lasts minutes rather than hours. As a result, an aerial survey lasting one day may require a series of batteries whose combined weight exceeds the total weight of the other parts of the UAS. Nevertheless, today’s batteries may be powerful enough to carry a payload that is heavier than the aircraft itself. For mapping and inspection purposes, the on-board sensor will be a high-resolution RGB camera, a Lidar system, a near-infrared or thermal-infrared camera, a video recorder or a combination of these sensors.
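The battery arithmetic above can be made concrete with a small sketch. The function name `batteries_needed`, the 20% charge reserve and all the numbers in the usage lines are assumptions chosen for illustration only; they do not describe any particular UAS.

```python
import math


def batteries_needed(survey_minutes: float, endurance_minutes: float,
                     reserve_fraction: float = 0.2) -> int:
    """Number of battery packs needed to cover the planned flying time.

    reserve_fraction is the share of each charge kept unused as a safety
    margin (an assumed planning rule, not a figure from the article).
    """
    if endurance_minutes <= 0:
        raise ValueError("endurance_minutes must be positive")
    if not 0 <= reserve_fraction < 1:
        raise ValueError("reserve_fraction must be in [0, 1)")
    usable_minutes = endurance_minutes * (1 - reserve_fraction)
    return math.ceil(survey_minutes / usable_minutes)


# Hypothetical survey day: 250 minutes of flying, 25 minutes per charge.
packs = batteries_needed(survey_minutes=250, endurance_minutes=25)
battery_mass_kg = packs * 0.6   # assumed 0.6 kg per pack
other_parts_kg = 4.0            # assumed mass of airframe, GCS and sensors
print(packs, battery_mass_kg > other_parts_kg)
```

With these assumed figures the batteries outweigh the rest of the system, which is the situation the paragraph describes. Different endurance or pack masses will of course change the outcome.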

Piloting

Since no human operator is on board, the aircraft has to be controlled either by remote piloting over a radio link or (semi-)autonomously. Remotely piloted vehicles (RPVs) require continuous input from a human operator on the ground, who has a wireless connection to the UAS through the GCS. The images or videos captured by the on-board camera are transmitted to the computer through a real-time data downlink, giving the operator on the ground a pilot’s-eye view as if from inside the cockpit. Today, a UAS is usually controlled by a single person and can operate semi-autonomously, sometimes even up to the level of automatic take-off and landing. The operator only intervenes when the UAS encounters an obstacle or other potential threat. The camera or other geodata collection sensors may be mounted so that they look straight down (nadir), or they may be attached to a tray that can rotate around one, two or three perpendicular axes so that the surface can be observed from a variety of angles. To preserve a fixed viewing angle while the aircraft pitches and rolls, the mount may be equipped with built-in stabilisation. Viewing angle, image exposure, zoom and other operating parameters of the sensor are controlled by the pre-loaded flight plan, but the human operator may intervene through the GCS if necessary.
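The stabilisation idea can be illustrated with a deliberately simplified sketch. It treats each gimbal axis independently, which is only a reasonable approximation for small attitude angles. The function name `gimbal_command` and the assumed mechanical limits of ±45° are hypothetical and not taken from any real mount.

```python
def gimbal_command(target_deg: float, aircraft_deg: float,
                   min_deg: float = -45.0, max_deg: float = 45.0) -> float:
    """Angle for one gimbal axis, measured relative to the airframe.

    target_deg is the viewing angle wanted relative to the ground and
    aircraft_deg is the current aircraft attitude on the same axis.
    The result is clamped to the assumed mechanical range of the mount.
    """
    wanted = target_deg - aircraft_deg
    return max(min_deg, min(max_deg, wanted))


# Camera should keep looking nadir (0 degrees on both axes) while the
# aircraft rolls 8 degrees and pitches 3 degrees.
roll_cmd = gimbal_command(target_deg=0.0, aircraft_deg=8.0)
pitch_cmd = gimbal_command(target_deg=0.0, aircraft_deg=3.0)
print(roll_cmd, pitch_cmd)
```

A real stabilised mount would run this kind of correction continuously from gyro measurements and would account for the coupling between axes. The sketch only shows the principle of counter-rotating the mount by the aircraft's attitude.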

Eye Contact

The trend is towards more autonomous systems. Autonomous guidance means that the on-board computer, fed by sensor inputs, is in full control rather than a human operator. While fully autonomous guidance is currently used for military operations, control by a human operator remains essential for civilian use. In theory an autonomous flight requires no human input after take-off, but in practice the operator will maintain eye contact with the aircraft during the entire flight. The use of a flight guidance system is now common, allowing semi-autonomous flights. Once airborne, the UAS is steered by an autopilot using the pre-loaded flight plan; in other words, the aircraft follows a set of pre-programmed waypoints. The core sensors enabling auto-piloting are small GNSS receivers and solid-state gyros, possibly accompanied by a barometer to estimate altitude from air pressure and a compass to measure the heading of the aircraft.
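Waypoint following can be sketched in a few lines. The example below is a minimal illustration, not how any specific autopilot works: the `Waypoint` type, the `next_target` helper and the 10 m acceptance radius are assumptions, and the Earth is treated as a sphere (haversine formula).

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, spherical approximation


@dataclass(frozen=True)
class Waypoint:
    lat_deg: float
    lon_deg: float


def distance_and_bearing(a: Waypoint, b: Waypoint) -> tuple[float, float]:
    """Great-circle distance (m) and initial bearing (degrees from north) a -> b."""
    lat1, lon1 = math.radians(a.lat_deg), math.radians(a.lon_deg)
    lat2, lon2 = math.radians(b.lat_deg), math.radians(b.lon_deg)
    dlat, dlon = lat2 - lat1, lon2 - lon1
    h = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    distance = 2 * EARTH_RADIUS_M * math.asin(min(1.0, math.sqrt(h)))
    y = math.sin(dlon) * math.cos(lat2)
    x = math.cos(lat1) * math.sin(lat2) - math.sin(lat1) * math.cos(lat2) * math.cos(dlon)
    bearing = (math.degrees(math.atan2(y, x)) + 360.0) % 360.0
    return distance, bearing


def next_target(position: Waypoint, plan: list[Waypoint], index: int,
                acceptance_radius_m: float = 10.0) -> int:
    """Index of the waypoint to steer towards; len(plan) once the plan is done."""
    while (index < len(plan)
           and distance_and_bearing(position, plan[index])[0] <= acceptance_radius_m):
        index += 1  # waypoint reached, move on to the next one
    return index


plan = [Waypoint(52.0, 5.0), Waypoint(52.001, 5.0), Waypoint(52.001, 5.002)]
position = Waypoint(52.0, 5.0)  # would come from the GNSS receiver
target = next_target(position, plan, index=0)
if target < len(plan):
    print(distance_and_bearing(position, plan[target]))
```

In each control cycle the autopilot would compare the bearing to the active waypoint with the measured heading and steer to reduce the difference. Altitude control and wind correction are left out of this sketch.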

Expect the Unexpected

Unexpected situations can always arise, such as a sudden strong wind, running out of power or a close encounter with another (flying) object. Sensors should therefore be able to detect the unexpected in order to avoid collisions or crashes. Many systems can not only detect the unexpected but also determine the correct course of action, such as manoeuvring the aircraft back to the take-off site, identifying a suitable place to land or, in the extreme case, arranging a soft crash which may damage the UAS but avoids injuries to humans and animals, thus potentially saving lives. A semi-autonomous UAS is also able to monitor and assess its own ‘health’, status and configuration within its programmed limits. On-board sensors may, for example, detect a defective motor or damaged propeller of a multicopter and adjust the other rotors to compensate, so that the UAS remains stable in the air and thus under control.
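The choice between these courses of action can be pictured as a simple priority rule. The sketch below is an assumption-laden illustration: the `Action` names, the `choose_action` function, its inputs and the 10% battery reserve are all hypothetical, and real failsafe logic takes many more factors into account.

```python
from enum import Enum


class Action(Enum):
    CONTINUE = "continue mission"
    RETURN_HOME = "return to take-off site"
    LAND_NEARBY = "land at nearest suitable site"
    CONTROLLED_CRASH = "controlled soft crash"


def choose_action(battery_fraction: float, fraction_to_reach_home: float,
                  wind_speed_ms: float, max_wind_ms: float,
                  controllable: bool, reserve_fraction: float = 0.1) -> Action:
    """Pick the least drastic action that is still safe (assumed rule set)."""
    if not controllable:
        # Extreme case: stable flight can no longer be maintained.
        return Action.CONTROLLED_CRASH
    if battery_fraction < fraction_to_reach_home:
        # Not enough charge left to fly back, so land close by instead.
        return Action.LAND_NEARBY
    if (wind_speed_ms > max_wind_ms
            or battery_fraction < fraction_to_reach_home + reserve_fraction):
        return Action.RETURN_HOME
    return Action.CONTINUE


# Hypothetical status: 25% charge left, 20% needed to get home, light wind.
print(choose_action(0.25, 0.20, wind_speed_ms=5.0, max_wind_ms=12.0,
                    controllable=True).value)
```

Ordering the checks from most to least severe keeps the rule easy to reason about: the aircraft only continues the mission when none of the more urgent conditions applies.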
